Approaching design in the age of AI

A quick start guide to more thoughtful, responsible, and intelligent experiences

Disclaimer: Although I work on AI experiences at Microsoft, the views expressed here are my own.

Unless you’ve been living under a rock, you’ve probably noticed that artificial intelligence is having a moment. It’s everywhere: in virtual assistants, customer service, college papers, your friends’ profile pictures, and screen protectors.

The sheer scale at which AI has exploded is evident in the market valuations of relative newcomers like OpenAI and Anthropic, as well as the massive investments being made at Microsoft (where I work), Google, and NVIDIA.

As someone who designs for AI experiences and has watched them evolve over the last few years, I’ve gotten to see everything happening in our industry firsthand: the hope, the hype, and the trials and tribulations.

I’ve also been asked a lot of questions about what it means to be a product designer right now, with so much change and ambiguity. Where is our place at the table when interactions with AI happen in chat? How can I integrate AI into my product? Should I? What even is responsible AI?

Back to basics

When most people think of AI user experiences, they think of chat. It’s not hard to see why. ChatGPT, Copilot, Gemini, Claude — all of these are popular, chat-centric experiences. It stands to reason, then, that all AI experiences should have some kind of chat functionality to add familiarity to the experience, right?

This single decision point is the trap I most frequently see products (and designers) fall into. What makes artificial intelligence so promising and powerful isn’t its ability to chat (something we’ve had for a quarter century now); it’s its ability to infer and reason. The answer isn’t to simply phone it in with Yet Another Chat experience, but to return to our roots as the subject matter experts in end-to-end experiences.

Ask yourself:

How would my product benefit from a system that can understand and cater to user intent?

What pain points am I trying to solve with it?

How can I integrate AI into the fabric of my experiences in a way that is unobtrusive but available?

Will chat reduce friction for my users, or create new pain points?

Does my product even need AI in the first place?

Maybe the most important question is: am I leading with technology, or with customer need?

In that sense, designing for AI is just like designing for any other surface or interface!

We do research to understand how customers work right now and what’s preventing them from getting things done, and we design experiences that don’t just address those pain points directly, but anticipate customers’ needs before they arise. We think holistically: about all of the services the product connects to, about the platform it’s built on, about the first run experience and onboarding, and about user retention.

Of course, that’s where the similarities end.

Determining the non-deterministic

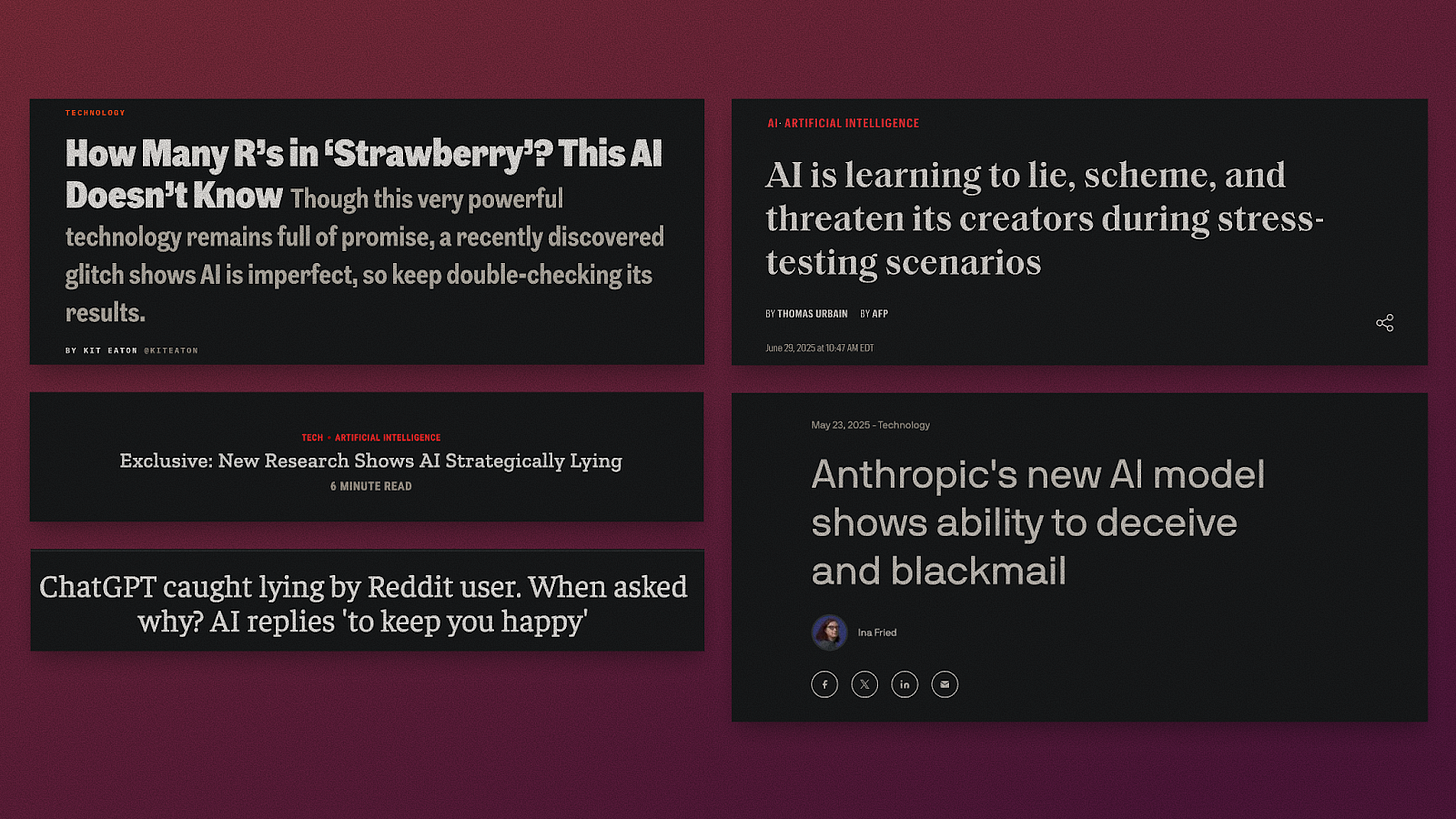

AI used to (and, depending on where you look, still does) lie. A lot. Look no further than the veritable deluge of posts showing users of various chat products asking them how many b’s or r’s are in “strawberry.”

Misinformation, hallucinations, and user perceptions of them aren’t just an engineering problem, but a design problem. Indeed, artificial intelligence is only as good as the information it has access to. So, how do we solve for this? Here are a few options to explore as you get started:

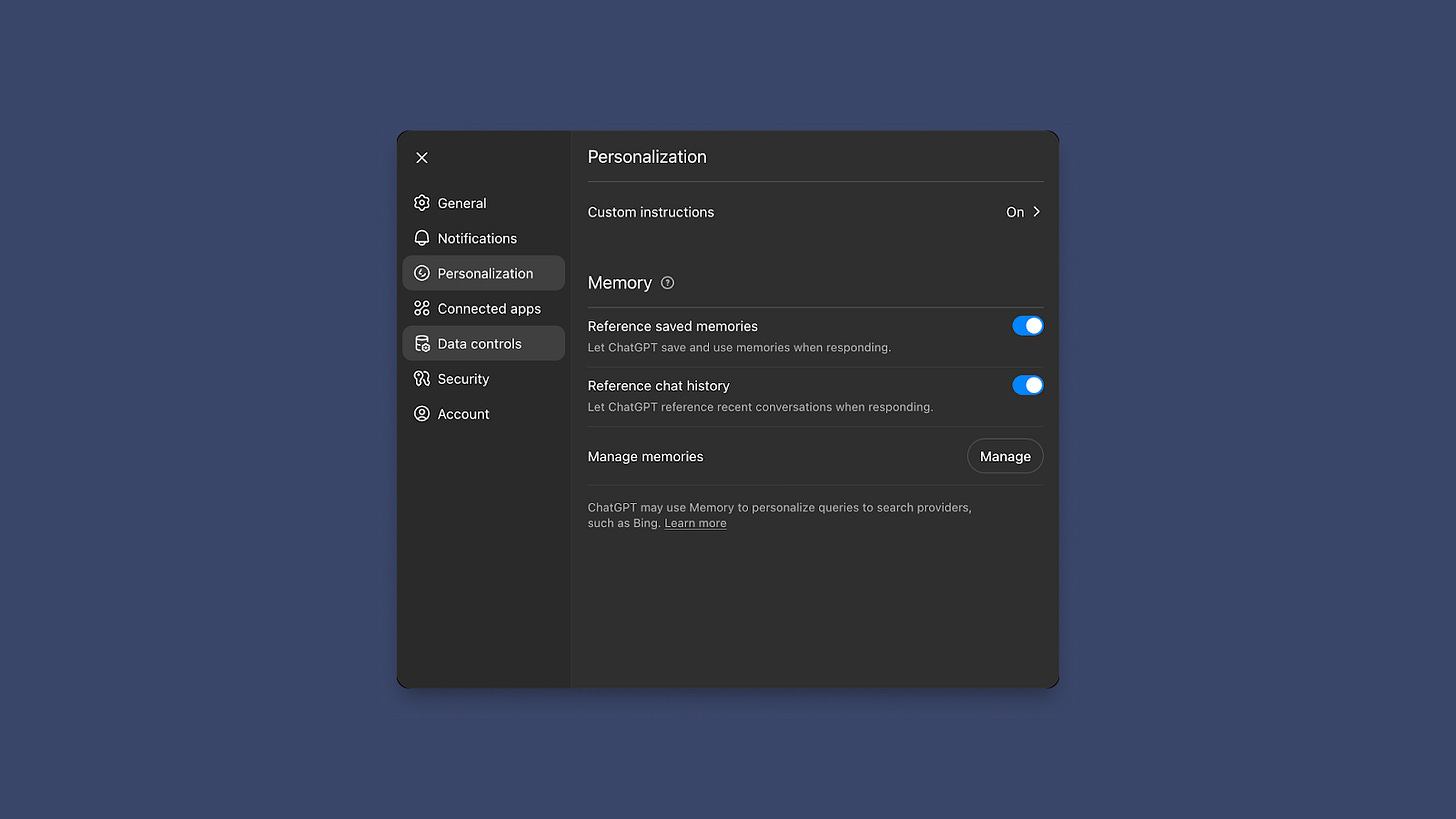

User control — Consider allowing your users to see and customize the sources of information your models draw on. This can not only reduce errors, but also add much-needed context that helps the AI ascertain user intent in the moment.
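As a rough illustration of what source-level user control could look like behind the interface, here is a minimal Python sketch. The names `Source`, `active_sources`, and `build_prompt` are hypothetical and not taken from any specific product, and the sketch assumes the sources are consulted at request time rather than baked into the model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    """A hypothetical information source the user can toggle in settings."""
    name: str
    enabled: bool = True


def active_sources(sources):
    """Return only the sources the user has left switched on."""
    return [s for s in sources if s.enabled]


def build_prompt(question, sources):
    """Compose a prompt that tells the model which sources it may use."""
    names = ", ".join(s.name for s in active_sources(sources)) or "no sources"
    return f"Answer using only: {names}.\nQuestion: {question}"


# Example: the user has turned off "Public web" in a settings panel.
sources = [Source("Team wiki"), Source("Public web", enabled=False), Source("My files")]
print(build_prompt("When is the launch review?", sources))
```

The point for designers is less the code than the contract it implies: whatever the user toggles in the interface should visibly change what the system is allowed to consult.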

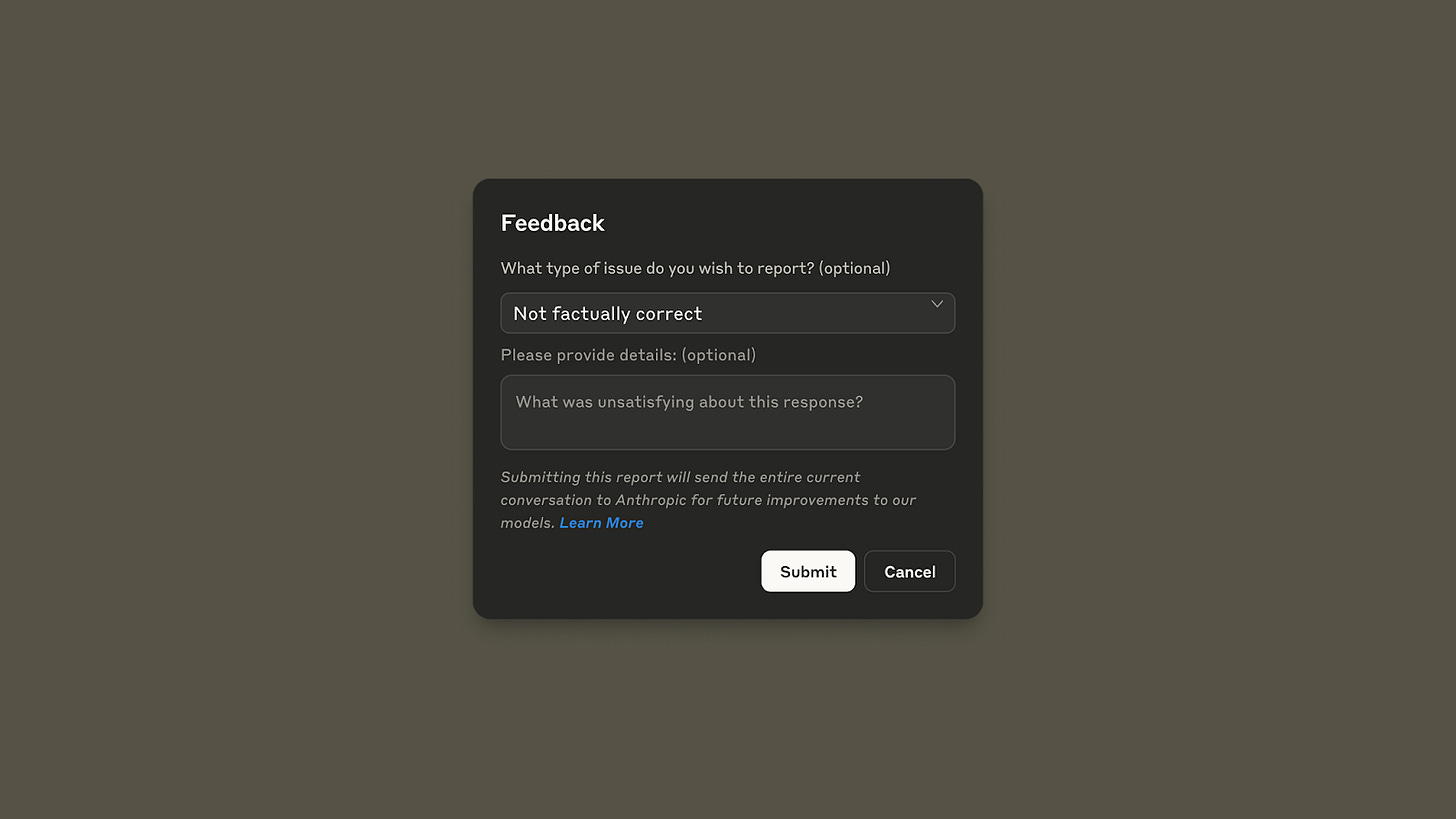

Feedback — Always provide an avenue for users to correct errors. This could be direct written feedback, or even something as simple as a rating system. As with any service, this is likely to be your most valuable piece of telemetry, and without it, you risk alienating users and missing out on key insights into how you can improve your product(s).
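To make the feedback idea a little more concrete, here is a minimal Python sketch of a thumbs-up/down record with an optional comment, plus two small helpers for turning it into telemetry. The `Feedback` fields and the `helpful_rate` and `needs_review` helpers are assumptions for illustration only, not a description of any real product's schema.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Feedback:
    """One piece of user feedback on a single AI response (hypothetical schema)."""
    response_id: str
    helpful: bool                  # thumbs up / thumbs down
    comment: Optional[str] = None  # optional free-text correction


def helpful_rate(items):
    """Share of responses rated helpful, or None if there is no feedback yet."""
    if not items:
        return None
    return sum(1 for f in items if f.helpful) / len(items)


def needs_review(items):
    """Negative ratings that include a comment: the most actionable feedback."""
    return [f for f in items if not f.helpful and f.comment]


items = [
    Feedback("r1", True),
    Feedback("r2", False, "The cited date is wrong."),
    Feedback("r3", True),
    Feedback("r4", True),
]
print(helpful_rate(items))
print([f.response_id for f in needs_review(items)])
```

Even a structure this small supports both of the options mentioned above: the boolean covers a simple rating, and the comment field gives users room to say what went wrong.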

Alternate models — If large language models are casting too wide a net for your context, there is a case to be made for considering small language models (SLMs) instead. They’re cheaper to run, and for more tightly scoped contexts, could produce more consistent results.

With great power…

While AI has revolutionized multiple industries with the promise of intelligent curation, automation, and delegation, there are inherent risks as well.

Just this week, Mustafa Suleyman — the CEO of Microsoft AI, which oversees all of its consumer offerings — wrote an excellent blog post on his personal site in which he examined the risks of making AI appear too human.

This is where responsible AI principles truly shine. Even the most well-funded products produced by the biggest companies in the world are not immune to pitfalls like implicit bias, exclusion, and dark patterns.

It is incumbent upon designers (and their product teams!) to embrace the principles of transparency, user control, and safety. How might your product be used to harm others? What can be exploited at your expense or that of your users? What’s stopping bad actors from accessing sensitive information? While these can be tough questions to ask in the design and development process, answering them thoroughly will save you a world of hurt (and potentially your business).

We are in the middle of a watershed moment in technology and society. This is an exciting and sometimes-terrifying time to be a designer, with opportunity and peril in equal parts at every turn.

We have a generational opportunity and responsibility to push technology and our society forward through thoughtful, intentional design. No pressure.

Very cool! Thanks for the share Will!

Thanks for sharing, Will. Super interesting. What’s your take on “humanizing” AI? I mean, the fact that you “chat with the AI” already makes it feel a bit like a human interaction.

And that’s only for device-based AI products. I was shocked the other day when I saw, for the first time, the Uber delivery bot carrying food down the street, with eyes on its front screen and a smile. I think humanizing bots should be prohibited!